Robotics as a control problem

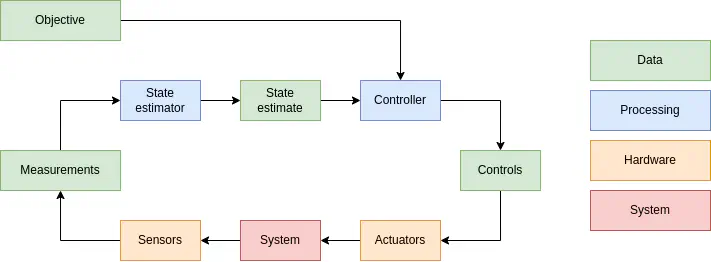

Fundamentally, robotics involves a sense-think-act loop, in which a set of actuators is controlled using feedback from sensors in order to drive the system towards a desired state.

This control problem consists of two parts:

- A controller: Given the current state and an objective, what inputs should be chosen to achieve this objective?

- A state estimator: Using the set of measurements received, what is the best estimate of the system state?
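
As a rough illustration of how these two parts fit into the loop, here is a minimal sketch for a one-dimensional toy system. Everything in it is an assumption made for illustration: the names `Estimator`, `controller` and `run_loop`, the gains, and the plant model (a position that integrates a velocity input, measured without noise).

```python
from dataclasses import dataclass


@dataclass
class Estimator:
    """Blends each new measurement into a running estimate (a simple low-pass filter)."""
    estimate: float = 0.0
    gain: float = 0.5  # hypothetical blending weight in [0, 1]

    def update(self, measurement: float) -> float:
        self.estimate += self.gain * (measurement - self.estimate)
        return self.estimate


def controller(estimate: float, target: float, kp: float = 0.8) -> float:
    """Proportional controller: the input is proportional to the remaining error."""
    return kp * (target - estimate)


def run_loop(target: float, steps: int = 50, dt: float = 0.1) -> float:
    """Run the sense-think-act loop and return the final true position."""
    position = 0.0  # true state of the toy plant, hidden from the controller
    estimator = Estimator()
    for _ in range(steps):
        measurement = position                     # sense (noise-free in this sketch)
        estimate = estimator.update(measurement)   # think: estimate the state
        velocity = controller(estimate, target)    # think: choose the input
        position += velocity * dt                  # act: the plant integrates the input
    return position
```

Calling `run_loop(1.0, steps=500)` drives the position towards the target of 1.0. A real robot would replace each of the three steps with something far more involved, but the structure of the loop stays the same.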

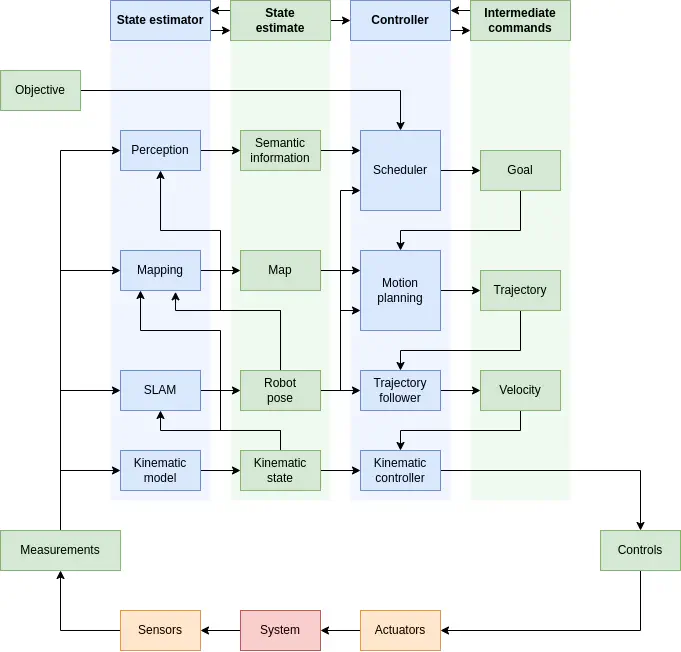

In a general robotics problem, this is decomposed into various sub-problems.

An example system might look something like this:

Components of state

Different parts of the state estimate have very different properties. The state can include:

- The kinematic state of the robot (joint positions, velocities, accelerations).

- The robot pose in the environment.

- A description of the environment.

- Semantic information about the environment (eg: identified objects).

Each part requires a different state estimation method.

Similarly, the controller can be decomposed into a number of processes, each producing an intermediate command. Some examples are:

- A velocity command for the mobile base.

- A desired trajectory for the mobile base to follow (or for an arm or manipulator).

- A desired goal pose/configuration for the robot which it needs to find a path towards.
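
The cascade of intermediate commands can be sketched as two stages: one that turns a goal into waypoints, and one that turns the next waypoint into a velocity command. This is only a toy under stated assumptions: `plan_path` and `velocity_command` are hypothetical names, the world is an obstacle-free 2D plane, and the robot is treated as a point that can move in any direction.

```python
import math


def plan_path(start, goal, spacing=0.5):
    """Planner stand-in: evenly spaced straight-line waypoints from start to goal."""
    dist = math.dist(start, goal)
    n = max(1, math.ceil(dist / spacing))  # number of segments
    return [
        (start[0] + (goal[0] - start[0]) * i / n,
         start[1] + (goal[1] - start[1]) * i / n)
        for i in range(1, n + 1)
    ]


def velocity_command(position, waypoint, max_speed=1.0):
    """Local controller stand-in: head straight for the waypoint, capped at max_speed."""
    dx, dy = waypoint[0] - position[0], waypoint[1] - position[1]
    dist = math.hypot(dx, dy)
    if dist == 0.0:
        return (0.0, 0.0)  # already at the waypoint
    speed = min(max_speed, dist)
    return (speed * dx / dist, speed * dy / dist)


# Goal pose -> waypoints -> velocity command for the first waypoint.
path = plan_path((0.0, 0.0), (2.0, 0.0))
command = velocity_command((0.0, 0.0), path[0])
```

Each stage consumes the output of the one before it, so the stages can be developed, tested and replaced separately.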

What makes robotics difficult

Although the control-loop description above is accurate, what differentiates robotics from classical control is the introduction of several complications:

- Non-linear state equations: Linear control theory cannot be applied directly, so specific control policies may be required to guarantee convergence to the objective, as is the case for non-holonomic mobile robots.

- Complex state: In addition to its own pose, the robot may need to estimate its environment, which cannot be represented as easily.

- Complex configuration constraints: State trajectories must satisfy complex configuration constraints (eg: avoid obstacles), so some form of planning is required. There may also be input constraints that need to be satisfied.

- Non-linear sensors: Sensors such as cameras or range-based sensors don’t give simple measurements, so linear estimators aren’t suitable.

- Linearly unobservable systems: There is insufficient sensor information to determine the full system state at any single point in time, so more complex inference algorithms, such as SLAM, are required to estimate the state.
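
To make the first and fourth points concrete, here is a small sketch of a common textbook case: the unicycle model of a non-holonomic mobile base, and a range-and-bearing measurement of a landmark. The function names and the choice of model are assumptions for illustration; the source does not name a specific robot or sensor model.

```python
import math


def unicycle_step(x, y, theta, v, omega, dt):
    """One Euler step of unicycle kinematics.

    The state update depends on cos(theta) and sin(theta), so it is non-linear
    in the state. The robot can only move along its heading, not sideways,
    which is the non-holonomic constraint.
    """
    return (
        x + v * math.cos(theta) * dt,
        y + v * math.sin(theta) * dt,
        theta + omega * dt,
    )


def range_bearing(x, y, theta, lx, ly):
    """Range and bearing from the robot pose to a landmark at (lx, ly).

    Both outputs are non-linear functions of the pose (a square root and an
    arctangent), so a purely linear estimator cannot use them directly.
    """
    dx, dy = lx - x, ly - y
    rng = math.hypot(dx, dy)
    bearing = math.atan2(dy, dx) - theta
    bearing = math.atan2(math.sin(bearing), math.cos(bearing))  # wrap into [-pi, pi]
    return rng, bearing
```

A single range-and-bearing reading also does not fix the full pose on its own, which hints at why estimation has to combine many measurements over time.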

Designing software for robots

Ideally, we would have a magic computer that can do all processing instantaneously. On a real computer, processing takes time.

The processing blocks (shown in blue in the diagram) are referred to as components.

Based on the diagram above, we can make a number of observations:

- The components are largely independent. Data is exchanged between components, but it doesn’t need to be available to all parts of the system at all times.

- Some components are more computationally expensive (eg: SLAM) but may also have reduced update frequency requirements.

- Some data is particularly large (eg: map) so care needs to be taken in how this is exchanged between components.

From this, there are two conclusions:

- Run components in parallel. This allows each component to update at its own rate and makes better use of the available processing resources.

- Choose strategies for exchanging data between components, taking particular care with large pieces of data.

The exact strategy for exchanging data depends on how the components are parallelised.
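
As one illustration, assume the components are parallelised as threads within a single process. A simple exchange strategy is then a shared slot that always holds the latest value. The sketch below uses only Python's standard `threading` module; `LatestValue`, `run_component` and the three component functions are hypothetical names, and the rates are arbitrary.

```python
import threading
import time


class LatestValue:
    """Thread-safe slot holding only the most recent value written by a producer."""

    def __init__(self, initial=None):
        self._lock = threading.Lock()
        self._value = initial

    def write(self, value):
        with self._lock:
            self._value = value  # stores a reference, so large data is not copied

    def read(self):
        with self._lock:
            return self._value


def run_component(step, period_s, iterations):
    """Call step(i) a fixed number of times, sleeping period_s between calls."""
    for i in range(iterations):
        step(i)
        time.sleep(period_s)


def demo():
    pose = LatestValue(initial=0)         # small, updated often
    map_version = LatestValue(initial=0)  # stands in for a large, rarely updated map
    commands = []                         # only the planner thread appends here

    def localiser(i):  # fast component
        pose.write(i + 1)

    def mapper(i):     # slow, expensive component
        map_version.write(i + 1)

    def planner(i):    # reads whatever is newest from both producers
        commands.append((pose.read(), map_version.read()))

    threads = [
        threading.Thread(target=run_component, args=(localiser, 0.001, 20)),
        threading.Thread(target=run_component, args=(mapper, 0.01, 2)),
        threading.Thread(target=run_component, args=(planner, 0.002, 10)),
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return pose.read(), map_version.read(), commands
```

Passing a reference avoids copying large data, but it only works if readers treat the shared object as read-only. Note also that in standard CPython, threads do not execute Python bytecode in parallel, so CPU-heavy components would need separate processes, where the exchange strategy changes again (for example serialised messages or shared memory).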

Other requirements

There are many other things that go into building a robot, which further complicate the whole system:

- Actually building the hardware and interfacing with it.

- Configuring all the different components, allowing parameters to be tweaked at runtime, and saving and loading these parameters.

- Modelling a robot.

- Providing sufficient simulation tools, so things can be tested without the real robot.

- Providing data recording and playback tools.

- System identification: fitting parameters based on measured data.

- Allowing different configurations for testing different parts of the system.

- Providing introspection tools to see the data being exchanged between components and the state of the components.

- Providing GUI and teleoperation tools for a user to control the robot and view the state.