Mobile robotics university module - ROS, localisation and path planning

Posted on April 2, 2021 by Zach Lambert

Another robotics module I took in my final year of university focused specifically on mobile robotics. It introduced the following concepts:

- Localisation with the extended Kalman filter (EKF) and particle filters

- Path planning with potential field methods, rapidly-exploring random trees (RRT) and RRT*
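For reference, the EKF predict/update cycle can be sketched for a toy one-dimensional robot. This is an illustrative sketch, not the module's code: the robot moves along a line by a commanded `control` each step and measures its range to a landmark placed 2 m off its track. That range is a nonlinear function of position, which is why the EKF linearises it with the Jacobian `H`. The landmark position and the noise variances `q` and `r` are made-up values.

```python
import math

LANDMARK = (5.0, 2.0)  # hypothetical landmark 2 m to the side of the robot's track


def measure(x, landmark=LANDMARK):
    # Nonlinear measurement model h(x): range from position x to the landmark.
    return math.hypot(x - landmark[0], landmark[1])


def ekf_step(mean, var, control, z, q=0.01, r=0.04, landmark=LANDMARK):
    # Predict with the linear motion model x' = x + u (Jacobian F = 1).
    mean_pred = mean + control
    var_pred = var + q
    # Linearise h around the prediction: H = dh/dx.
    expected = measure(mean_pred, landmark)
    H = (mean_pred - landmark[0]) / expected
    S = H * var_pred * H + r          # innovation variance
    K = var_pred * H / S              # Kalman gain
    mean_new = mean_pred + K * (z - expected)
    var_new = (1.0 - K * H) * var_pred
    return mean_new, var_new


true_x, mean, var = 0.0, 1.0, 1.0     # initial guess is 1 m off, with high uncertainty
for _ in range(4):
    true_x += 0.5
    z = measure(true_x)               # noise-free reading keeps the example deterministic
    mean, var = ekf_step(mean, var, 0.5, z)
```

Starting 1 m off with a variance of 1.0, the estimate locks on to the true position within a couple of steps. Driving past the landmark's x-coordinate would make `H` approach zero, where the range reading says almost nothing about x, which is a small example of why the linearisation point matters.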

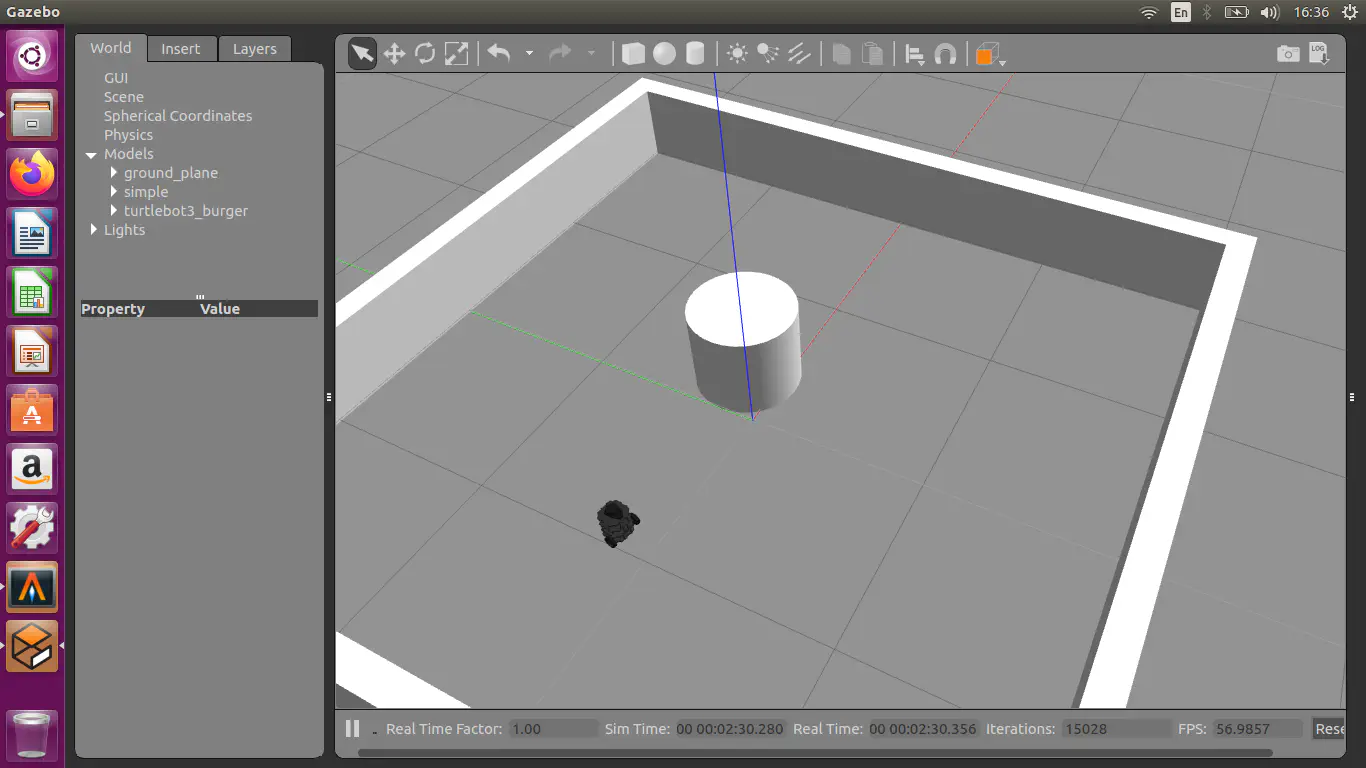

The assignments used Python, ROS and the Gazebo simulator.

Assignment 1 - Simple obstacle avoidance and the particle filter

This assignment involved running the TurtleBot simulation in ROS and completing some simple tasks.

The first task was simple obstacle avoidance using a Braitenberg vehicle, illustrating that simple control policies can be effective.
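A Braitenberg-style avoider maps sensor readings almost directly to wheel speeds, with no map or planning. The sketch below is an assumption about how this could look rather than the assignment code: the lidar scan is split into left and right halves (assuming index 0 is on the robot's right), each side's closest reading inhibits the opposite wheel, and the gains and the 0.16 m wheel separation (roughly a TurtleBot3 Burger) are illustrative values. Publishing the result on a ROS `cmd_vel` topic is left out.

```python
import math


def braitenberg_wheel_speeds(ranges, base_speed=0.2, gain=0.2, max_range=3.5):
    """Map a lidar scan to (left, right) wheel speeds using crossed inhibition.

    Assumes `ranges` runs from the robot's right side (index 0) to its left
    side (last index); non-finite readings are treated as "nothing seen".
    """
    half = len(ranges) // 2
    right_side, left_side = ranges[:half], ranges[half:]

    def proximity(side):
        # 0.0 when nothing is within max_range, approaching 1.0 at contact.
        nearest = min((r for r in side if math.isfinite(r)), default=max_range)
        return 1.0 - min(nearest, max_range) / max_range

    # Crossed inhibitory wiring: an obstacle on the left slows the right wheel,
    # so the robot turns right, away from it, and slows down when boxed in.
    left_speed = base_speed - gain * proximity(right_side)
    right_speed = base_speed - gain * proximity(left_side)
    return left_speed, right_speed


def to_twist(left_speed, right_speed, wheel_separation=0.16):
    """Differential-drive kinematics: wheel speeds -> (linear, angular) velocity."""
    linear = (left_speed + right_speed) / 2.0
    angular = (right_speed - left_speed) / wheel_separation  # > 0 means turning left
    return linear, angular


# Obstacle 0.5 m away on the left, open space on the right.
left, right = braitenberg_wheel_speeds([3.5] * 180 + [0.5] * 180)
linear, angular = to_twist(left, right)
```

Crossed inhibition turns the robot away from the nearer obstacle and also slows it when both sides are close. It is only one of the wirings Braitenberg described; same-side excitation also turns away, but speeds the robot up near obstacles.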

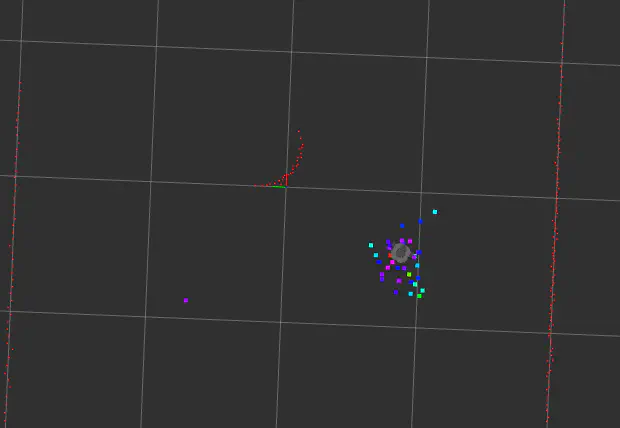

The second task was more interesting: it used a particle filter with lidar data and a known map to localise the robot. When first initialised, the filter held multiple hypotheses for the robot's pose because the environment was relatively homogeneous. Once the robot observed the centre obstacle, however, the likelihood of the incorrect hypotheses became small and the filter converged on the correct one.
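The behaviour described above, with several pose hypotheses surviving until an observation rules them out, also shows up in a one-dimensional toy. In this sketch (not the assignment code) the robot drives along a symmetric corridor and measures the distance to the nearest wall, so positions `x` and `10 - x` explain the reading equally well. The corridor, noise levels and particle count are assumptions; a real lidar model would compare a whole scan against ray casts into the map.

```python
import math
import random

WORLD_LENGTH = 10.0  # hypothetical 1D corridor with walls at x = 0 and x = 10


def expected_range(x):
    # Measurement model: distance to the nearest wall. The corridor is symmetric,
    # so x and WORLD_LENGTH - x give the same reading (two hypotheses).
    return min(x, WORLD_LENGTH - x)


def particle_filter_step(particles, control, z, rng, motion_sigma=0.1, sensor_sigma=0.2):
    # 1. Predict: move every particle with the noisy motion model.
    moved = [p + control + rng.gauss(0.0, motion_sigma) for p in particles]
    # 2. Update: weight each particle by the Gaussian likelihood of the reading.
    weights = [math.exp(-0.5 * ((z - expected_range(p)) / sensor_sigma) ** 2) for p in moved]
    total = sum(weights)
    if total == 0.0:  # every particle ruled out: fall back to uniform weights
        weights, total = [1.0] * len(moved), float(len(moved))
    weights = [w / total for w in weights]
    # 3. Low-variance (systematic) resampling: likely particles are duplicated,
    #    unlikely ones disappear.
    n = len(moved)
    offset = rng.uniform(0.0, 1.0 / n)
    resampled, cumulative, i = [], weights[0], 0
    for k in range(n):
        target = offset + k / n
        while target > cumulative and i < n - 1:
            i += 1
            cumulative += weights[i]
        resampled.append(moved[i])
    return resampled


rng = random.Random(0)
particles = [rng.uniform(0.0, WORLD_LENGTH) for _ in range(1000)]
true_x, history = 2.0, []
for _ in range(5):
    true_x += 0.5                                     # robot drives 0.5 m forward
    z = expected_range(true_x) + rng.gauss(0.0, 0.2)  # noisy range reading
    particles = particle_filter_step(particles, 0.5, z, rng)
    history.append(particles)
estimate = sum(particles) / len(particles)
```

After the first step the particles sit in two clusters, one near the true position and one at its mirror image. Moving forward breaks the symmetry: the mirrored cluster predicts a shrinking range while the actual reading grows, so its particles receive negligible weight and are not resampled.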

Assignment 2 - Potential field methods and RRT for navigation

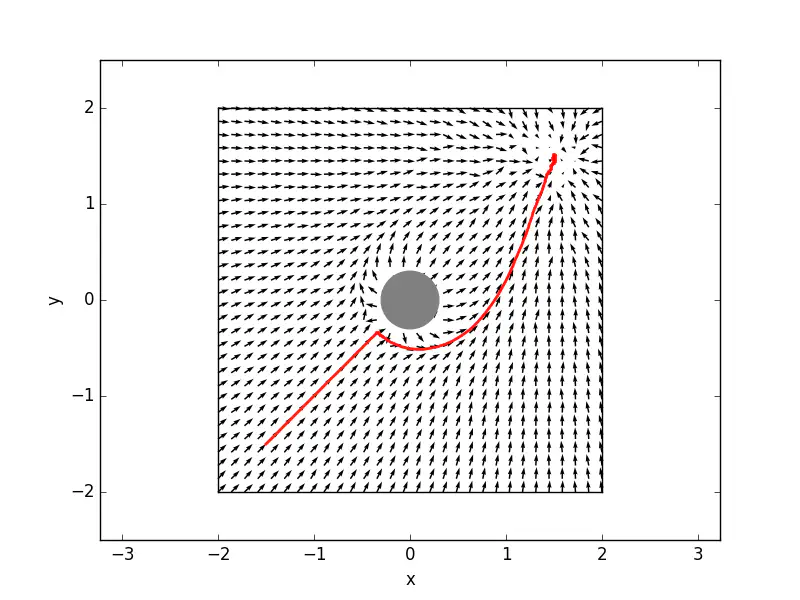

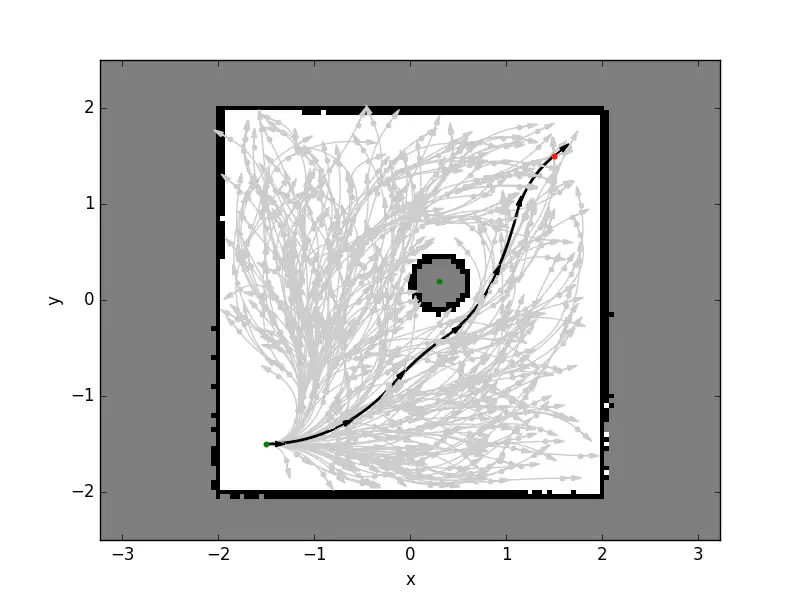

The second assignment focused on path planning.

The first concept introduced was navigation with the potential field method. This again showed how a simple solution can give reasonable results, although it is only really suitable for convex environments: in non-convex ones the robot can get trapped in local minima of the potential.
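A minimal potential field planner sums an attractive pull towards the goal and a repulsive push from each obstacle, then steps along the result. This sketch assumes circular obstacles, a quadratic attractive potential and a Khatib-style repulsive potential that only acts within an `influence` distance of an obstacle's surface; the gains and step size are illustrative, not values from the module.

```python
import math


def potential_force(q, goal, obstacles, k_att=1.0, k_rep=0.5, influence=1.0):
    """Negative gradient of a quadratic attractive + Khatib-style repulsive potential."""
    fx, fy = k_att * (goal[0] - q[0]), k_att * (goal[1] - q[1])
    for ox, oy, radius in obstacles:
        dx, dy = q[0] - ox, q[1] - oy
        centre_dist = max(math.hypot(dx, dy), 1e-9)
        d = max(centre_dist - radius, 1e-6)   # distance to the obstacle's surface
        if d < influence:
            mag = k_rep * (1.0 / d - 1.0 / influence) / d ** 2
            fx += mag * dx / centre_dist      # push directly away from the centre
            fy += mag * dy / centre_dist
    return fx, fy


def navigate(start, goal, obstacles, step=0.05, tol=0.1, max_iters=2000):
    """Follow the normalised force in fixed-size steps (gradient descent on the potential)."""
    q, path = start, [start]
    for _ in range(max_iters):
        if math.dist(q, goal) < tol:
            break
        fx, fy = potential_force(q, goal, obstacles)
        n = math.hypot(fx, fy)
        if n < 1e-9:  # zero force: a stationary point of the potential
            break
        q = (q[0] + step * fx / n, q[1] + step * fy / n)
        path.append(q)
    return path


ok = navigate((0.0, 0.0), (5.0, 0.0), [(2.5, 1.0, 0.5)])     # obstacle beside the line
stuck = navigate((0.0, 0.0), (5.0, 0.0), [(2.5, 0.0, 0.5)])  # obstacle on the line
```

With the obstacle just beside the straight line, the robot is deflected around it and reaches the goal. With the same obstacle placed exactly on the line, attraction and repulsion cancel along the axis and the robot stalls in front of it, a simple case of the stationary points that become hard to escape in non-convex environments.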

Following this, we looked at path planning with RRT and then extended it to RRT*, which is asymptotically optimal: as the number of samples grows, the cost of the returned path converges to the optimum.
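The difference between RRT and RRT* comes down to two extra steps when a new node is added: choosing the cheapest nearby parent rather than just the nearest node, and rewiring neighbours through the new node when that shortens their path. The sketch below assumes a 10-by-10 square map with circular obstacles, collision checking by sampling points along each edge, a fixed neighbour `radius` (RRT* usually shrinks this as the tree grows) and a small goal bias; none of these parameters come from the assignment.

```python
import math
import random


def segment_is_free(a, b, obstacles, resolution=0.05):
    """Check evenly spaced points on segment a-b against circular obstacles."""
    n = max(1, math.ceil(math.dist(a, b) / resolution))
    for k in range(n + 1):
        t = k / n
        p = (a[0] + t * (b[0] - a[0]), a[1] + t * (b[1] - a[1]))
        if any(math.dist(p, (ox, oy)) <= r for ox, oy, r in obstacles):
            return False
    return True


def rrt_star(start, goal, obstacles, size=10.0, iters=800, step=0.5,
             radius=1.0, goal_tol=0.5, goal_bias=0.1, seed=0):
    rng = random.Random(seed)
    nodes, parent = [start], [None]

    def cost(i):
        # Path length back to the start, found by walking parent links.
        total = 0.0
        while parent[i] is not None:
            total += math.dist(nodes[i], nodes[parent[i]])
            i = parent[i]
        return total

    for _ in range(iters):
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(0.0, size), rng.uniform(0.0, size))
        nearest = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        d = math.dist(nodes[nearest], sample)
        if d == 0.0:
            continue
        t = min(1.0, step / d)  # steer at most `step` towards the sample
        base = nodes[nearest]
        new = (base[0] + t * (sample[0] - base[0]), base[1] + t * (sample[1] - base[1]))
        if not segment_is_free(base, new, obstacles):
            continue
        # Plain RRT would stop here and attach `new` to `nearest`.
        near = [i for i in range(len(nodes))
                if math.dist(nodes[i], new) <= radius
                and segment_is_free(nodes[i], new, obstacles)]
        # RRT* step 1: attach `new` to the neighbour giving the cheapest path.
        best = min(near, key=lambda i: cost(i) + math.dist(nodes[i], new))
        nodes.append(new)
        parent.append(best)
        new_idx = len(nodes) - 1
        new_cost = cost(new_idx)
        # RRT* step 2: rewire neighbours through `new` if that shortens their path.
        for i in near:
            if new_cost + math.dist(new, nodes[i]) < cost(i):
                parent[i] = new_idx

    reached = [i for i in range(len(nodes)) if math.dist(nodes[i], goal) <= goal_tol]
    if not reached:
        return None, math.inf
    i = min(reached, key=cost)
    best_cost, path = cost(i), []
    while i is not None:
        path.append(nodes[i])
        i = parent[i]
    return path[::-1], best_cost


path, length = rrt_star((1.0, 1.0), (9.0, 9.0), obstacles=[(5.0, 5.0, 1.5)])
```

Costs are recomputed by walking parent links instead of being cached, so rewiring a node automatically updates the cost of its whole subtree. This is slow for large trees but keeps the sketch short.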

Assignment 3 - Potential field method for navigation in 3D

The final assignment was selected from a list of options. My partner and I chose a “spaceship navigation” task, which looked at three additional ideas:

- Modifying the potential field to handle moving obstacles

- Navigating in 3D space in addition to 2D space

- Training a neural network to replicate the control policy, taking the robot pose and the list of obstacles as input
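One way the potential field might be extended to moving obstacles in 3D is sketched below. It is an assumption rather than the approach used in the assignment: each obstacle is a sphere moving at constant velocity, and the robot is repelled from where the obstacle is predicted to be `horizon` seconds ahead instead of from its current position. The names `predictive_force` and `simulate` are hypothetical. Vectors are plain tuples, so the same functions also work in 2D.

```python
import math


def add(a, b):
    return tuple(x + y for x, y in zip(a, b))


def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def scale(a, s):
    return tuple(x * s for x in a)


def norm(a):
    return math.sqrt(sum(x * x for x in a))


def predictive_force(q, goal, obstacles, horizon=0.5, k_att=1.0, k_rep=0.5, influence=1.5):
    """Attractive + repulsive force; obstacles are (position, velocity, radius) tuples."""
    f = scale(sub(goal, q), k_att)
    for pos, vel, radius in obstacles:
        # Assumed modification: repel from the obstacle's predicted position
        # `horizon` seconds ahead, assuming it keeps a constant velocity.
        predicted = add(pos, scale(vel, horizon))
        diff = sub(q, predicted)
        centre_dist = max(norm(diff), 1e-9)
        d = max(centre_dist - radius, 1e-6)
        if d < influence:
            mag = k_rep * (1.0 / d - 1.0 / influence) / d ** 2
            f = add(f, scale(diff, mag / centre_dist))
    return f


def simulate(start, goal, obstacles, speed=1.0, dt=0.05, tol=0.1, max_steps=1000):
    """Move at constant speed along the force; returns (position, min clearance, reached)."""
    q, min_clearance = start, math.inf
    for _ in range(max_steps):
        if norm(sub(goal, q)) < tol:
            return q, min_clearance, True
        f = predictive_force(q, goal, obstacles)
        f_norm = norm(f)
        if f_norm > 1e-9:
            q = add(q, scale(f, speed * dt / f_norm))
        # Advance every obstacle with its constant velocity.
        obstacles = [(add(p, scale(v, dt)), v, r) for p, v, r in obstacles]
        for p, _, r in obstacles:
            min_clearance = min(min_clearance, norm(sub(q, p)) - r)
    return q, min_clearance, False


final, clearance, reached = simulate(
    start=(0.0, 0.0, 0.0),
    goal=(6.0, 0.0, 0.0),
    obstacles=[((3.0, 1.2, 0.0), (0.0, 0.0, 0.5), 0.5)],  # sphere drifting upwards
)
```

Repelling from the predicted position lets the robot start avoiding an obstacle that is heading towards it before the obstacle arrives; with `horizon=0` it reduces to an ordinary static potential field.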

The code was written in Python, using PyTorch for the neural network and Pygame for visualisation. I focused on the simulation and the “analytical” potential field code, while my partner focused on the neural network code.